Assistive robots will need to interact with articulated objects such as cabinets or

microwaves. Early work

on creating systems for doing so used proprioceptive sensing to estimate joint mechanisms

during contact.

However, nowadays, almost all systems use only vision and no longer consider proprioceptive

information

during contact. We believe that proprioceptive information during contact is a valuable

source of

information and did not find clear motivation for not using it in the literature. Therefore,

in this

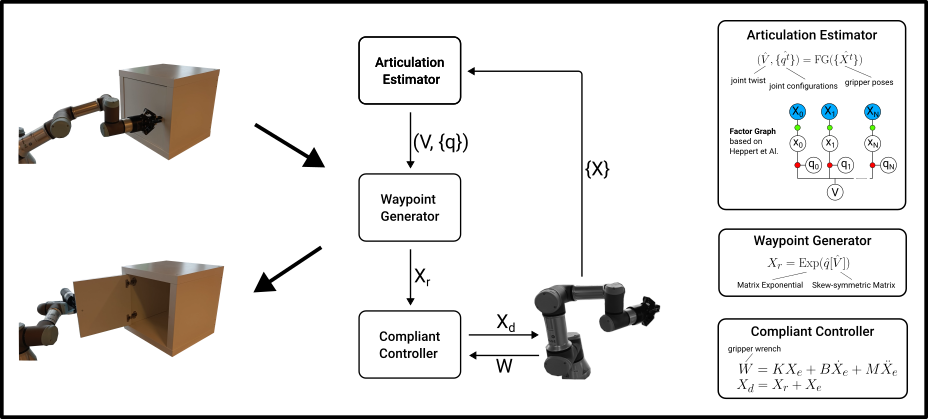

paper, we create a system that, starting from a given grasp, uses proprioceptive sensing to

open cabinets

with a position-controlled robot and a parallel gripper. We perform a qualitative evaluation

of this

system, where we find that slip between the gripper and handle limits the performance.

Nonetheless, we

find that the system already performs quite well. This poses the question: should we make

more use of

proprioceptive information during contact in articulated object manipulation systems, or is

it not worth

the added complexity, and can we manage with vision alone? We do not have an answer to this

question, but

we hope to spark some discussion on the matter.